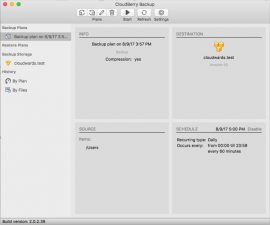

#Cloudberry backup weekly daily monthly mac

Here’s a brief overview of some of the things you should consider when choosing Mac backup software:

#Cloudberry backup weekly daily monthly software

There are countless paid and free Mac backup software tools to choose from, each offering a unique set of features and capabilities. Pricing and storage limits:

- IDrive Business – $99.50 per year for 250 GB, $199.50 per year for 500 GB, or $499.50 per year for 1.25 TB
- Dropbox Family – $16.99 a month for 2 TB shared between up to 6 users
- Dropbox Business Standard – $12.50 per user per month for 5 TB when billed annually
- Dropbox Business Advanced – $20 per user per month for unlimited storage when billed annually
- IDrive Personal – $69.50 per year for 5 TB or $99.50 per year for 10 TB
- Multiple computers – from $24 per month when billed annually
- Depends on which cloud storage service you choose
- One computer – from $6 per month when billed annually
- Unlimited plan – $7 per month per computer, $70 per year per computer, or $130 per two years per computer
- Limited only by the size of your backup drive

#Cloudberry backup weekly daily monthly code

I run 100+ different backup runs daily. I also try to test the backups around monthly, and I've fully automated the backup testing. I see problems every now and then, and I've got automated monitoring and reporting for all of the jobs.

But there are fundamental issues: things which really shouldn't get broken do get broken, and the recovery from those issues is at times poor to none.

> Which just means that overall reliability isn't good enough for production use.

You never know when you can't restore the data anymore, or when the restore takes a month instead of one hour and still fails.

But many of those issues are linked to secondary problems. First something goes slightly amiss, and then the recovery process is bad, and that's what creates the big issue. In production-ready software both of those recovery situations should work. First the primary issue should be dealt with efficiently and automatically, and even if that fails, the secondary recovery process, whether automated or manual, should still work in some adequate and sane way.

As an example, take file systems or databases. If the system is hard reset, the journal should allow recovery on boot, and even if that fails, there should be a process to bring the consistency back, even if it isn't as fast as the journal-based roll forward or backward. And if the software is really bad, the file system gets corrupted during normal operation even without the hard reset. Currently the backup works out mostly OK, but you never know when it blows up. At times it has also blown up without a hard reset, and recovering from that has usually been nearly impossible. Sure, I've had similar situations with ext4 and NTFS, but in most cases that's a totally broken SSD or "cloud storage backend". And it's joyfully rare that a file system becomes absolutely and irrecoverably corrupted. We're not there with Duplicati yet.

Anyway, as mentioned before, Duplicati's features are absolutely awesome. If I didn't really like the software, I wouldn't bother complaining about the issues. I would simply choose something different.

Transaction compaction doesn't cause any issues if it works correctly. There's nothing new with GC processes, and those won't cause data corruption.

Also, a situation where data blobs and the local database are out of sync is recoverable in logical terms, but I doubt that Duplicati's code is able to do it correctly.

As a programmer, I understand that recovering from some cases is really annoying, but as a data guy, I say of course it should recover, because there's no technical reason why it wouldn't be possible.

Example cases, which are annoying to deal with, but technically shouldn't be a problem at all:

A slightly different approach to the artificially created mismatch:

- Back up the backup files in the remote storage.
- Restore the backup files in the remote storage.

In both cases, restoring the state in the local database and checking backup integrity should complete, because now there's a clear mismatch between the local database and the remote files.

Depending on the quality of the recovery code, such a situation causes either "total failure" or just a slow recovery without problems. Any properly working production-quality software should be able to recover from that. Basic software works if it happens to work; otherwise it says "yuck, something is wrong" and that's it. In the worst case, some ultra badly coded program could just continue working as normal, but the restore would fail. When working with production systems, recovery from different kinds of problems is very important. Sure, it might be needed rarely, but when it's needed, it's really needed.
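
The mismatch between the local database and the remote files discussed in this post can at least be detected mechanically, by comparing what the local index expects with what the remote storage actually holds. Below is a minimal Python sketch of that idea. It is not Duplicati's implementation: `find_mismatch`, `MismatchReport`, and the "file name to SHA-256 digest" mapping format are hypothetical names and assumptions chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class MismatchReport:
    """Differences between a local index and a remote listing (hypothetical type)."""
    missing_remote: list = field(default_factory=list)     # in local index, absent on remote
    unexpected_remote: list = field(default_factory=list)  # on remote, unknown to local index
    hash_mismatch: list = field(default_factory=list)      # present in both, digests differ

    @property
    def consistent(self) -> bool:
        # Consistent only when no category contains any file name.
        return not (self.missing_remote or self.unexpected_remote or self.hash_mismatch)


def find_mismatch(local_index: dict, remote_listing: dict) -> MismatchReport:
    """Compare two {file name: digest} mappings and report every difference.

    Assumption: both sides can be reduced to such a mapping. A real backup
    tool would read these from its database and from the storage backend.
    """
    report = MismatchReport()
    for name, digest in sorted(local_index.items()):
        if name not in remote_listing:
            report.missing_remote.append(name)
        elif remote_listing[name] != digest:
            report.hash_mismatch.append(name)
    report.unexpected_remote = sorted(set(remote_listing) - set(local_index))
    return report
```

Calling `find_mismatch(local, remote)` after restoring an older copy of the remote files would be expected to populate `missing_remote` or `hash_mismatch`. A recovery routine could then decide per category whether to re-upload, re-index, or fail loudly, rather than continuing as if nothing had happened.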
